Estimating robot pose and joint angles is an important problem in robotics, enabling applications such as robot collaboration and online hand-eye calibration. However, unknown joint angles make the prediction higher-dimensional, and therefore harder, than estimating the robot pose alone. Previous methods either regress 3D keypoints directly or utilise a render&compare strategy. These approaches often fall short in performance or efficiency, and they struggle with the cross-camera gap problem. This paper presents a novel framework that splits the high-dimensional prediction task into two manageable subtasks: 2D keypoint detection and lifting 2D keypoints to 3D. This separation promises better performance without sacrificing the efficiency inherent to keypoint-based techniques. A vital component of our method is the lifting of 2D keypoints to 3D keypoints. Common deterministic regression methods may fail when faced with uncertainty from 2D detection errors or self-occlusions. Leveraging the strong modeling capacity of diffusion models, we reframe this problem as conditional 3D keypoint generation. To improve cross-camera adaptability, we introduce the Normalised Camera Coordinate Space (NCCS), which aligns estimated 2D keypoints across varying camera intrinsics. Experimental results demonstrate that the proposed method outperforms the state-of-the-art render&compare method and achieves higher inference speed. Furthermore, the experiments highlight our method's robust cross-camera generalisation.
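The abstract does not spell out how NCCS is computed, so the following is only a minimal sketch of the general idea of removing intrinsics from 2D keypoints. It assumes an undistorted pinhole camera and maps pixel coordinates to the standard normalised image plane; the function names `project` and `normalise_keypoints` and all numeric intrinsics are hypothetical, and the actual NCCS definition may differ.

```python
import numpy as np


def project(points_cam, fx, fy, cx, cy):
    """Pinhole projection of 3D camera-frame points (N, 3) to pixels (N, 2)."""
    points_cam = np.asarray(points_cam, dtype=float)
    u = fx * points_cam[:, 0] / points_cam[:, 2] + cx
    v = fy * points_cam[:, 1] / points_cam[:, 2] + cy
    return np.stack([u, v], axis=1)


def normalise_keypoints(kpts_px, fx, fy, cx, cy):
    """Map pixel keypoints (N, 2) to intrinsics-free coordinates (x/z, y/z).

    Assumption: no lens distortion. This is the textbook normalised image
    plane, used here as a stand-in for the paper's NCCS.
    """
    kpts_px = np.asarray(kpts_px, dtype=float)
    out = np.empty_like(kpts_px)
    out[:, 0] = (kpts_px[:, 0] - cx) / fx
    out[:, 1] = (kpts_px[:, 1] - cy) / fy
    return out


# The same 3D keypoint seen by two cameras with different (made-up) intrinsics.
point = np.array([[0.2, -0.1, 2.0]])
cam_a = dict(fx=600.0, fy=600.0, cx=320.0, cy=240.0)
cam_b = dict(fx=900.0, fy=910.0, cx=640.0, cy=360.0)

px_a = project(point, **cam_a)
px_b = project(point, **cam_b)
norm_a = normalise_keypoints(px_a, **cam_a)
norm_b = normalise_keypoints(px_b, **cam_b)
```

In this sketch the pixel coordinates `px_a` and `px_b` differ between the two cameras, while the normalised coordinates coincide, which is the property a downstream 2D-to-3D lifting model would need in order to transfer across cameras.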

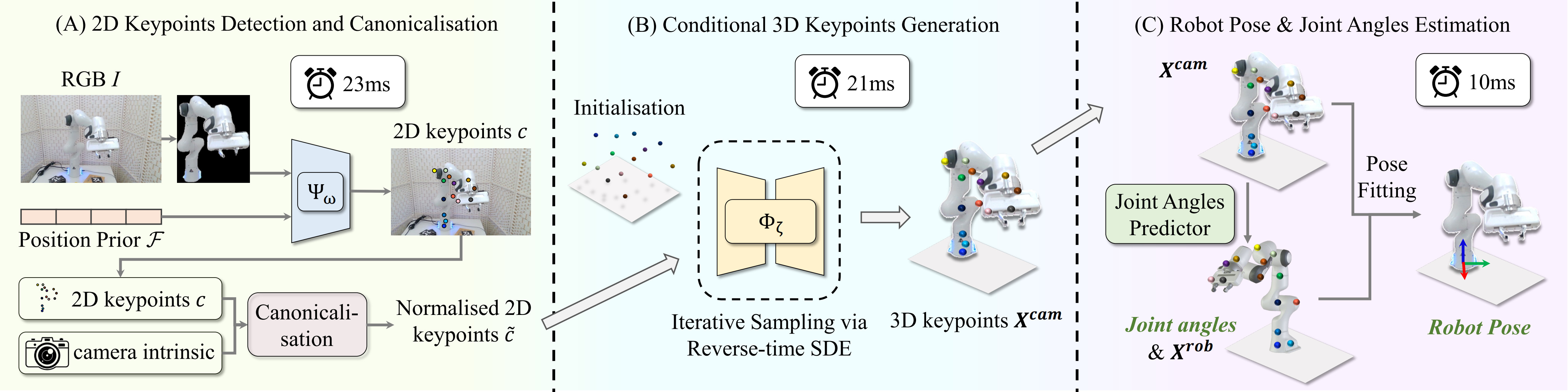

The inference pipeline of RoboKeyGen. (A) Given the RGB image I, the predicted segmentation mask, and the positional embedding prior F, we first predict 2D keypoints c with the detection network Ψω. (B) Conditioned on the 2D detections, we generate the 3D keypoints Xcam with the score network Φζ. (C) Finally, we predict joint angles from Xcam and recover Xrob from the URDF file. We then perform pose fitting between Xcam and Xrob to obtain the robot pose.
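The caption does not say which algorithm is used for the pose fitting in step (C). One standard option for aligning two sets of corresponding 3D points is the Kabsch algorithm, sketched below. It assumes Xrob holds keypoints in the robot frame and Xcam holds the same keypoints in the camera frame, in matching order; the function name `fit_rigid_pose` and the example values are hypothetical, and the authors' actual fitting procedure may differ.

```python
import numpy as np


def fit_rigid_pose(x_rob, x_cam):
    """Least-squares rigid transform (R, t) such that R @ x_rob[i] + t ~ x_cam[i].

    Kabsch algorithm; x_rob and x_cam are (N, 3) arrays of corresponding points.
    """
    x_rob = np.asarray(x_rob, dtype=float)
    x_cam = np.asarray(x_cam, dtype=float)

    # Centre both point sets on their centroids.
    mu_rob = x_rob.mean(axis=0)
    mu_cam = x_cam.mean(axis=0)

    # 3x3 cross-covariance between the centred sets.
    h = (x_rob - mu_rob).T @ (x_cam - mu_cam)
    u, _, vt = np.linalg.svd(h)

    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    d = 1.0 if np.linalg.det(vt.T @ u.T) >= 0 else -1.0
    r = vt.T @ np.diag([1.0, 1.0, d]) @ u.T
    t = mu_cam - r @ mu_rob
    return r, t


# Synthetic check: keypoints in the robot frame, moved by a known pose.
theta = np.deg2rad(30.0)
r_true = np.array([
    [np.cos(theta), -np.sin(theta), 0.0],
    [np.sin(theta), np.cos(theta), 0.0],
    [0.0, 0.0, 1.0],
])
t_true = np.array([0.1, -0.2, 1.5])
x_rob = np.array([
    [0.0, 0.0, 0.0],
    [0.3, 0.0, 0.1],
    [0.3, 0.2, 0.4],
    [0.1, 0.5, 0.7],
    [0.0, 0.2, 0.9],
])
x_cam = x_rob @ r_true.T + t_true

r_est, t_est = fit_rigid_pose(x_rob, x_cam)
```

With noise-free correspondences, as in this synthetic example, the estimate matches the true pose up to numerical precision. With noisy generated keypoints it returns the least-squares best fit.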

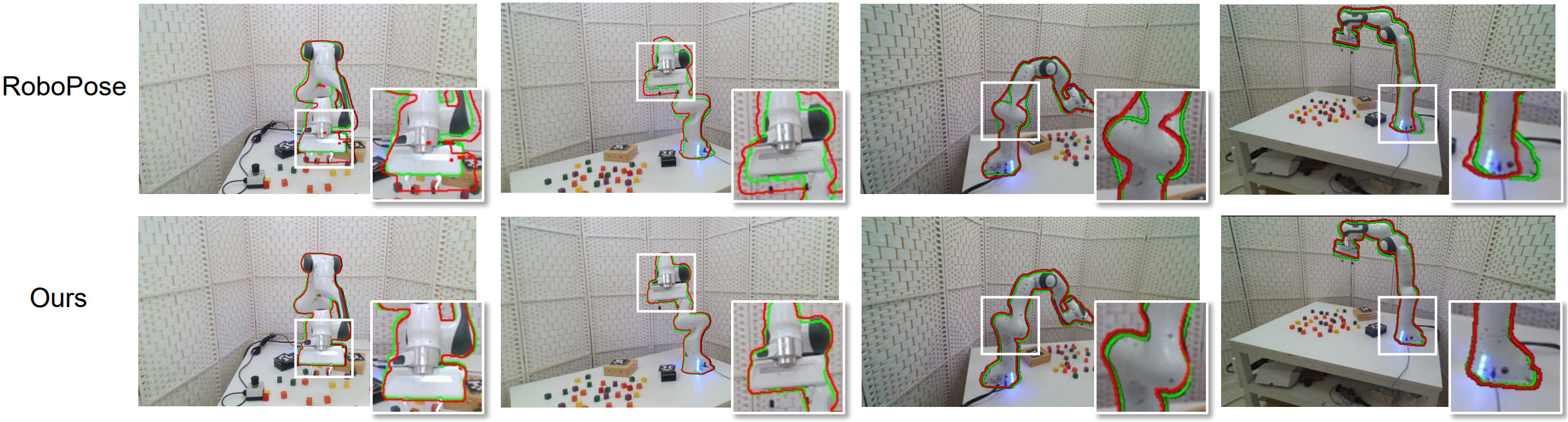

To validate our method, we show visualization results on real-world data captured with static or dynamic cameras while the robot pose remains unchanged. Green lines connect ground-truth keypoints, and red lines connect predicted keypoints. The white outline is the edge of the mask rendered from the estimated robot pose and joint angles. Since SPDH does not predict joint angles, we draw this outline only for our method and RoboPose. Our method provides more stable and accurate predictions than the state-of-the-art baselines.

Ours

RoboPose

SPDH

Ours

SPDH